Why LLMs Prefer Natural Language

An exploration of why Transformer-based language models respond more fluently and reliably to narrative input than to structured formats: the architectural bias toward sequential context, the cost of reconstructing meaning from JSON or YAML, and how to write prompts that align with how LLMs actually reason.

TL;DR

- LLMs are built to predict tokens in sequence, not to interpret rigid schemas

- Natural language wins because it’s rich, contextual, and self-correcting

- Structured formats (JSON, YAML, tables) increase processing cost and reduce clarity

- Even structure-savvy models like Codex, Claude, and Gemini must rebuild narrative meaning from structured input before responding

- Use language as the interface; let structure support it, not replace it

Introduction

Large Language Models (LLMs) are reshaping how we generate, understand, and interact with information. From summarizing legal contracts to generating code documentation, these models have shown incredible proficiency across diverse tasks.

But there’s a subtle trap: because LLMs can parse JSON, YAML, and other structured formats, we often assume they prefer them. In reality, while LLMs can work with structured data, they operate best when the input mimics what they were built to consume: rich, natural sequences; in other words, language.

This article explores why LLMs respond better to natural language than to structured data, examining architectural, cognitive, and performance aspects. It also offers concrete prompting strategies, comparative examples, and technical reflections rooted in experience and architectural insight.

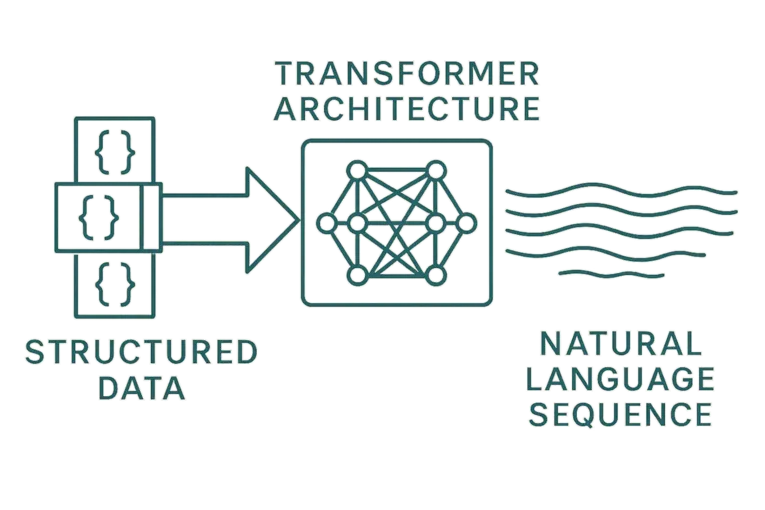

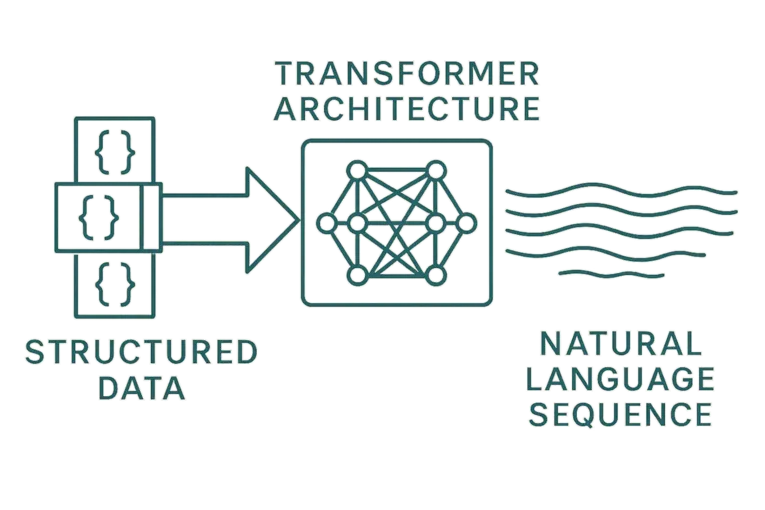

1. What Transformers Are Really Built For

Transformers, the architecture behind most modern LLMs, are designed to process sequences of tokens. These tokens can be words, characters, or subwords. What’s critical is the order and context in which they appear.
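The toy Python sketch below makes the phrase "words, characters, or subwords" concrete. The vocabulary `VOCAB` is hypothetical. The greedy longest-match rule is only similar in spirit to WordPiece. Real tokenizers (BPE, WordPiece, SentencePiece) learn vocabularies of tens of thousands of pieces from data.

```python
# Toy tokenizers illustrating three granularities.
# VOCAB is a hypothetical subword vocabulary chosen for this example.
VOCAB = {"trans", "form", "er", "s"}


def word_tokens(text: str) -> list[str]:
    # One token per whitespace-separated word.
    return text.split()


def char_tokens(text: str) -> list[str]:
    # One token per character, spaces included.
    return list(text)


def subword_tokens(word: str, vocab: set[str]) -> list[str]:
    # Greedy longest-match segmentation of a single word;
    # falls back to a single character when no vocabulary piece matches.
    tokens = []
    start = 0
    while start < len(word):
        end = len(word)
        while end > start and word[start:end] not in vocab:
            end -= 1
        if end == start:  # no piece matched at this position
            end = start + 1
        tokens.append(word[start:end])
        start = end
    return tokens


print(word_tokens("transformers read sequences"))  # ['transformers', 'read', 'sequences']
print(char_tokens("read"))                          # ['r', 'e', 'a', 'd']
print(subword_tokens("transformers", VOCAB))        # ['trans', 'form', 'er', 's']
```

The same word becomes one, twelve, or four tokens depending on the scheme. In every case, the model receives an ordered sequence.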

Unlike databases or compilers, which work with predefined schemas and syntax trees, Transformers learn through attention mechanisms that weigh the relationships between tokens across a sequence.
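The attention step described above can be sketched in plain Python. This is scaled dot-product attention for a single head with no learned projections. As a simplifying assumption, the token vectors are used directly as queries, keys, and values. Real models differ in three ways: they first multiply the vectors by learned matrices, they run many heads in parallel, and decoder LLMs mask out future tokens.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: list[list[float]],
              keys: list[list[float]],
              values: list[list[float]]) -> list[list[float]]:
    # Scaled dot-product attention over one sequence.
    # queries and keys share dimension d; there is one value per key.
    d = len(keys[0])
    outputs = []
    for q in queries:
        # How strongly this token relates to every token in the sequence.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # non-negative, sums to 1
        # The output is the weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # three toy token vectors
for row in attention(tokens, tokens, tokens):
    print([round(x, 3) for x in row])
```

Each output row mixes information from every token in proportion to how related they are. Nothing in the computation knows about schemas or field names; it only sees vectors and their pairwise similarities.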

They are not schema learners. They are contextual sequence learners.

Key insight: The architecture of LLMs favors rich sequential context, not because it is “linguistic”, but because it is dense in dependencies. This applies to language, but also to code, music, and even DNA (as in models like DNABERT).

Transformers were not made to understand humans. They were made to understand sequences.

2. The Nature of Natural Language

Human language is inherently redundant, ambiguous, and context-rich, and that’s exactly what makes it ideal for LLMs:

- Redundancy gives the model more chances to infer meaning.

- Contextual depth lets the model use positional information to reason.

- Narrative structure creates semantic glue between concepts.
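The positional information mentioned above has to be injected explicitly, because unmasked attention on its own compares vectors without regard to their order. One well-known scheme is the sinusoidal encoding from the original Transformer paper, sketched here in Python. Many current LLMs use learned or rotary (RoPE) position embeddings instead. Treat this as an illustration, not as what any particular model does.

```python
import math


def sinusoidal_positions(num_positions: int, d_model: int) -> list[list[float]]:
    # PE[pos][2i]   = sin(pos / 10000 ** (2i / d_model))
    # PE[pos][2i+1] = cos(pos / 10000 ** (2i / d_model))
    table = []
    for pos in range(num_positions):
        row = []
        for j in range(d_model):
            i = j // 2  # each even/odd pair of dimensions shares a frequency
            angle = pos / (10000 ** (2 * i / d_model))
            row.append(math.sin(angle) if j % 2 == 0 else math.cos(angle))
        table.append(row)
    return table


for pos, vec in enumerate(sinusoidal_positions(3, 4)):
    print(pos, [round(x, 3) for x in vec])
```

Each position gets a distinct vector that is added to the token's embedding. As a result, the same word at position 0 and at position 2 enters attention with different representations.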

Natural language is not just information; it’s information with flow. That flow enables the LLM to model relationships, intentions, causality, and emphasis without the need for explicit keys or schemas.

Natural language is, by definition, sequentially rich. That’s the true match with Transformer-based LLMs.

3. Structured Data as a Secondary Format

Structured formats like JSON, YAML, and tables excel at precision but lack narrative. They are information containers, not communicative acts.

Example – JSON Input

```json
{
  "company": "Acme Inc.",
  "role": "Senior Backend Engineer",
  "impact": "Reduced latency by 80%",
  "tech": ["Python", "Redis", "Docker"]
}
```

This is machine-readable, but to a language model, it’s flat. There is no flow, no hierarchy of importance, no signal of what matters most. The model must guess how to order this into human-friendly language.

Natural Language Equivalent

At Acme Inc., I worked as a Senior Backend Engineer where I led a performance overhaul that reduced latency by 80%. I used tools like Python, Redis, and Docker to implement scalable solutions.

This input is already a story. The model doesn’t need to rearrange or reinterpret the intent.

Trade-off: Processing structured (“cold”) data into narrative (“hot”) form requires more computation, more inference, and more tokens, even with an instructive prompt.

This conversion isn’t free. Even models that handle structure well (e.g., Codex, Claude, Gemini) must internally reframe the data as language to deliver nuanced, human-like output.

4. Comparative Case Study: Input Style vs Output Quality

Input A: Raw JSON

```json
{
  "role": "Platform Engineer",
  "project": "CI/CD Refactor",
  "result": "Build time decreased by 60%"
}
```

Output

“Worked on CI/CD pipelines and reduced build time.”

Very generic. No nuance. The model doesn’t infer leadership, initiative, or tooling unless explicitly prompted.

Input B: JSON + Instruction

Use the JSON below to write a 2-sentence summary for a resume:

```json
{
  "role": "Platform Engineer",
  "project": "CI/CD Refactor",
  "result": "Build time decreased by 60%"
}
```

Output

“As a Platform Engineer, I led a CI/CD pipeline refactor that decreased build times by 60%. This resulted in faster delivery cycles and improved developer productivity.”

Better, but only because the instruction triggered natural language generation.
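When the data arrives as JSON, the instruction from Input B can be attached in code. This minimal Python sketch assumes you already have the record as a dict. The function name is hypothetical. The call to an actual model is left out because it depends on your client library.

```python
import json


def build_instructed_prompt(record: dict, instruction: str) -> str:
    # Mirror Input B: the natural-language instruction comes first,
    # followed by the pretty-printed data it refers to.
    payload = json.dumps(record, indent=2, ensure_ascii=False)
    return f"{instruction}\n\n{payload}"


record = {
    "role": "Platform Engineer",
    "project": "CI/CD Refactor",
    "result": "Build time decreased by 60%",
}
prompt = build_instructed_prompt(
    record, "Use the JSON below to write a 2-sentence summary for a resume:"
)
print(prompt)
```

The data is identical in Inputs A and B. Only the instruction changes, and it is what turns the raw record into a task.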

Input C: Natural Language Prompt

As a Platform Engineer, I redesigned the CI/CD system, cutting build times by 60% and accelerating release velocity.

Output

No transformation needed. It’s already expressive, human, and high-signal.

5. Prompting Strategies for Structured Data

If you must use structured formats, guide the model explicitly:

- Add narrative prompts: “Summarize this as if explaining to a hiring manager.”

- Use hybrid formats: Markdown sections + inline JSON blocks.

- Enrich structure with signals: Use full sentences in values, not just keywords.

Hybrid Prompt Example

```markdown
## Experience

**Company**: Acme Inc.
**Role**: Backend Engineer
**Highlights**:
- Refactored CI/CD pipelines → 60% faster builds
- Dockerized legacy services → improved scalability

Write a 2-sentence summary for a professional portfolio based on the above.
```

This combines the clarity of structure with the fluency of narrative.

Even when using structure, treat it as scaffolding, not as the source of meaning.
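If the structured fields already live in code, the hybrid layout can be generated instead of written by hand. This Python sketch reproduces the Experience example. The function and its parameters are hypothetical, and the exact Markdown conventions are a choice, not a requirement.

```python
def render_hybrid_prompt(section: str, fields: dict[str, str],
                         highlights: list[str], instruction: str) -> str:
    # Markdown provides the scaffolding (heading, bold labels, bullets);
    # the highlights and the closing instruction carry the narrative signal.
    lines = [f"## {section}", ""]
    lines += [f"**{label}**: {value}" for label, value in fields.items()]
    lines.append("**Highlights**:")
    lines += [f"- {item}" for item in highlights]
    lines += ["", instruction]
    return "\n".join(lines)


print(render_hybrid_prompt(
    "Experience",
    {"Company": "Acme Inc.", "Role": "Backend Engineer"},
    ["Refactored CI/CD pipelines → 60% faster builds",
     "Dockerized legacy services → improved scalability"],
    "Write a 2-sentence summary for a professional portfolio based on the above.",
))
```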

6. Engineering Takeaways

- LLMs are not schema-first: They perform best when the input mimics the data they were trained on.

- Text with flow outperforms data with structure when the task is generation.

- Your prompt is your preprocessor: use it to narrativize, contextualize, and signal importance.

- Structured inputs cost more: in attention span, token usage, and potential hallucination.
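Taken literally, using the prompt as a preprocessor can mean turning records into sentences in code before they reach the model. The template below is a hypothetical Python sketch built on the field names from the Acme example in section 3. It assumes `impact` begins with a past-tense verb, and it uses a rough vowel check to choose between "a" and "an".

```python
def join_items(items: list[str]) -> str:
    # English list joining: "A", "A and B", "A, B, and C".
    if not items:
        return ""
    if len(items) == 1:
        return items[0]
    if len(items) == 2:
        return f"{items[0]} and {items[1]}"
    return ", ".join(items[:-1]) + ", and " + items[-1]


def narrativize(record: dict) -> str:
    # Hypothetical template: lead with role and company, then the impact,
    # then the tools, so the sentence signals what matters most.
    role = record["role"]
    article = "an" if role[0].lower() in "aeiou" else "a"  # rough heuristic
    impact = record["impact"]
    impact = impact[0].lower() + impact[1:]  # assumes a past-tense verb first
    sentence = f"As {article} {role} at {record['company']}, I {impact}"
    tools = join_items(record.get("tech", []))
    if tools:
        sentence += f", using {tools}"
    return sentence + "."


record = {
    "company": "Acme Inc.",
    "role": "Senior Backend Engineer",
    "impact": "Reduced latency by 80%",
    "tech": ["Python", "Redis", "Docker"],
}
print(narrativize(record))
# As a Senior Backend Engineer at Acme Inc., I reduced latency by 80%, using Python, Redis, and Docker.
```

A template like this cannot add context the data does not contain. What it can do is give the model a sentence with an explicit order of importance instead of a flat set of keys.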

JSON tends to work better than YAML for LLMs, not because of its structure but because of exposure. Models have likely seen more JSON during pretraining (GitHub, APIs, docs), while YAML is more niche (CI configs, DevOps).

If you train a model like GPT-2 or LLaMA:

- with 90% JSON/CSV and 10% language → you get a model great at schema manipulation.

- with 90% natural language → you get one better at summarization, storytelling, and fluent response.
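The 90/10 split above describes a pretraining data mixture. A common way to realize such a mixture is to pick a source by weight for each example, then draw a document from that source. The Python sketch below shows only that sampling step. The source names, documents, and weights are hypothetical, and real pipelines typically mix at the token or shard level. The sketch says nothing about what the trained model ends up being good at.

```python
import random


def sample_mixture(sources: dict[str, list[str]], weights: dict[str, float],
                   n: int, seed: int = 0) -> list[str]:
    # For each example: pick a source according to the mixture weights,
    # then pick a document uniformly from that source.
    rng = random.Random(seed)  # seeded for reproducibility
    names = list(sources)
    probs = [weights[name] for name in names]
    batch = []
    for _ in range(n):
        name = rng.choices(names, weights=probs, k=1)[0]
        batch.append(rng.choice(sources[name]))
    return batch


sources = {
    "structured": ['{"role": "Platform Engineer"}', "name,team\nAda,Infra"],
    "prose": ["I redesigned the CI/CD system.", "Build times fell by 60%."],
}
batch = sample_mixture(sources, {"structured": 0.9, "prose": 0.1}, n=1000)
share = sum(doc in sources["structured"] for doc in batch) / len(batch)
print(f"structured share: {share:.2f}")
```

With these weights, the structured share of a large batch approaches 0.9. Swapping the weights gives the language-heavy mixture from the second bullet.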

Models with strong structured-data skills, such as Codex, Claude 3, or Gemini:

- Are aligned with structured tasks: JSON, YAML, OpenAPI, logs.

- Excel at code generation, schema inference, and structural correctness.

- But even they must turn structured data into a coherent internal sequence of meaning before producing human-facing output.

So the processing cost isn’t just in output formatting; it’s in semantic construction.

Conclusion

Transformers were built for sequences, not schemas. When you want a language model to speak well, give it language, not just data.

The best outputs still come when we speak to models like we speak to humans.

Because structured data must be translated internally into language-like meaning before the model can generate nuanced, impactful text, every layer of abstraction adds cost and can degrade quality.

So, if your goal is clear, persuasive, or high-signal language:

Speak in text. Feed context. Let sequence do the work.

Authored by Davi de Andrade Guides

Part of the series: “Thinking with Transformers”

Visit daviguides.github.io for more insights